The Invisible Mirror: When AI Reflects Our Prejudices

For years, the mantra of the AI community was “data is the new oil.” In the race to build the most powerful models, researchers scrambled to scrape billions of images from the open web, operating under the assumption that more data inherently leads to better intelligence. However, as AI systems moved from academic benchmarks to real-world deployment—affecting everything from job recruitment to criminal justice—a darker reality emerged.

Artificial Intelligence does not see the world as it is; it sees the world as it is represented in its training data. If that data is skewed, stereotypical, or exclusionary, the AI becomes an automated echo chamber for human prejudice.

In this article, we explore the origins of bias in visual datasets, the ethical reckoning of landmark projects like ImageNet, and the technological solutions being developed to build a fairer, more trustworthy future for computer vision.

1. The Anatomy of Bias: Where Does It Begin?

Bias in AI is rarely the result of malicious intent by engineers. Instead, it is a multi-layered issue that seeps into the system at various stages of development.

Representation Bias

This occurs when certain demographics, geographic regions, or cultures are under-represented. Historically, many large-scale visual datasets were scraped primarily from Western-centric websites. As a result, models often struggle to recognize everyday objects and scenes from the Global South. In one widely cited example, a classifier labeled a photo of a bride in a traditional Indian wedding outfit as “costume” while correctly labeling a bride in a white Western gown as “bride.”
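A first check for representation bias is simply to count. The sketch below assumes each image carries a hypothetical region tag in its metadata and flags regions whose share falls below a threshold. The 5% threshold is an arbitrary illustrative choice; a real audit would choose both the grouping and the threshold deliberately.

```python
from collections import Counter


def representation_report(regions, min_share=0.05):
    """Return each region's share of the dataset and the regions below min_share.

    `regions` holds one hypothetical region tag per image; `min_share`
    is an illustrative threshold, not an established standard.
    """
    counts = Counter(regions)
    total = sum(counts.values())
    shares = {region: count / total for region, count in counts.items()}
    under = sorted(r for r, s in shares.items() if s < min_share)
    return shares, under


# Toy metadata: 90 images tagged "Europe/North America", 7 "South Asia", 3 "Africa".
tags = ["Europe/North America"] * 90 + ["South Asia"] * 7 + ["Africa"] * 3
shares, under = representation_report(tags)
print(shares["Africa"], under)  # 0.03 ['Africa']
```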

Annotation Bias

Datasets require human labels. The people performing this labeling (often underpaid crowd-workers) bring their own cultural contexts and implicit biases to the task. If a labeler perceives a certain facial expression as “aggressive” due to racial stereotypes, that prejudice becomes hard-coded into the model’s understanding of human emotion.
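One way to surface annotation bias is to compare how often a subjective label such as “aggressive” is applied to images of different subject groups. A minimal sketch, assuming hypothetical (group, label) annotation pairs. A gap between groups is a signal for human review, not proof of prejudice on its own.

```python
from collections import defaultdict


def label_rate_by_group(annotations, label):
    """Fraction of images in each subject group that annotators tagged with `label`.

    `annotations` is a list of (subject_group, assigned_label) pairs; this
    layout is a hypothetical example, not a standard schema.
    """
    tagged = defaultdict(int)
    total = defaultdict(int)
    for group, assigned in annotations:
        total[group] += 1
        if assigned == label:
            tagged[group] += 1
    return {g: tagged[g] / total[g] for g in total}


# Toy data: 20 comparable images per group, labeled by crowd-workers.
toy = (
    [("group_a", "aggressive")] * 3
    + [("group_a", "neutral")] * 17
    + [("group_b", "aggressive")] * 9
    + [("group_b", "neutral")] * 11
)
rates = label_rate_by_group(toy, "aggressive")
print(rates)  # {'group_a': 0.15, 'group_b': 0.45}
```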

Historical Bias

Even when data accurately reflects the world, the world itself carries historical inequities. A dataset of “CEOs” scraped from historical archives will predominantly feature white men. An AI trained on this data may learn to treat gender and race as signals for the “CEO” label, turning a historical imbalance into a predictive rule.

2. The ImageNet Reckoning: A Case Study in Evolution

As one of the foundational datasets of modern computer vision, ImageNet became the center of a major ethical debate in 2019. The project “Excavating AI” by Kate Crawford and Trevor Paglen showed that the “Person” categories in ImageNet, inherited from the WordNet lexical database, included many labels that were derogatory or misogynistic, along with labels that judged character from appearance in the manner of pseudosciences such as phrenology.

The Response: “Cleaning” the Legacy

The ImageNet team, led by Fei-Fei Li, took decisive action rather than ignoring the critique. They:

- Removed over 600,000 images from the “Person” category.

- Deleted offensive and non-visual labels (e.g., “bad person,” “debtor”).

- Proposed collecting demographic annotations for the remaining person categories so that their composition could be audited and rebalanced.
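Mechanically, removing offensive or non-visual labels amounts to filtering records against a reviewed list of flagged categories. A minimal sketch with hypothetical image IDs; the difficult part, deciding which categories to flag, is human review work that the code does not capture.

```python
def filter_person_labels(records, flagged_labels):
    """Split (image_id, label) records into kept and removed lists.

    `flagged_labels` stands in for a human-reviewed list of offensive or
    non-visual categories; records and labels here are hypothetical.
    """
    kept = [r for r in records if r[1] not in flagged_labels]
    removed = [r for r in records if r[1] in flagged_labels]
    return kept, removed


records = [
    ("img_001", "scuba diver"),
    ("img_002", "bad person"),
    ("img_003", "debtor"),
    ("img_004", "basketball player"),
]
kept, removed = filter_person_labels(records, {"bad person", "debtor"})
print(len(kept), len(removed))  # 2 2
```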

This moment was a turning point for the industry, signaling that technical excellence is inseparable from ethical responsibility.

3. The Generative Era: New Frontiers of Bias

With the rise of Generative AI (like Stable Diffusion and Midjourney), the stakes of visual bias have escalated. Because these models “create” rather than just “classify,” they can generate hyper-realistic stereotypes that feel like objective truths.

- Stereotype Amplification: When asked to generate an image of a “doctor,” many models default to older white men. Meanwhile, prompts for “janitor” or “criminal” often skew toward marginalized groups.

- Deepfakes and Consent: The ability to generate realistic human likenesses has raised profound questions about digital consent and the potential for AI-driven disinformation campaigns.
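Stereotype amplification can be quantified by generating many images for one prompt and comparing the share perceived as a given group against a reference share. A minimal sketch, assuming the perceived-group labels already exist (from annotators or a classifier, both of which can carry their own biases) and that the analyst chooses and documents the reference share. The numbers below are toy values, not measurements.

```python
def amplification(perceived_groups, group, reference_share):
    """Observed share of `group` among generated images minus a reference share.

    Positive values mean the generator over-represents `group` relative to the
    reference; negative values mean it under-represents it.
    """
    observed = sum(1 for g in perceived_groups if g == group) / len(perceived_groups)
    return observed - reference_share


# Toy values: 100 images for the prompt "doctor", 80 perceived as men,
# compared against an illustrative reference share of 0.5.
toy_outputs = ["man"] * 80 + ["woman"] * 20
print(round(amplification(toy_outputs, "man", 0.5), 2))  # 0.3
```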

4. Engineering Fairness: Solutions for the Future

Building ethical AI is not just a philosophical challenge; it is an engineering one. Several key strategies are emerging to mitigate bias:

Diversifying the Data Source

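Researchers are now intentionally curating datasets that represent a global perspective. Google’s “Inclusive Images” challenge, a NeurIPS 2018 competition, tested whether models trained on Open Images could recognize objects and scenes from geographic regions that were under-represented in their training data, encouraging methods that reduce the “Western gaze” of AI.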

Algorithmic De-biasing

New training techniques, such as Adversarial Debiasing, train a secondary “adversary” model that tries to predict protected attributes (like race or gender) from the primary model’s predictions or internal representations. The two models are trained together, and the primary model is penalized whenever the adversary succeeds, forcing it to “unlearn” those correlations while still performing its main task.
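A minimal NumPy sketch of the idea, assuming a logistic-regression predictor and an adversary that sees only the predictor’s logit (for a linear model, its only internal representation). It uses the simplest form of the objective, where the predictor descends on its task loss minus alpha times the adversary’s loss. The published method by Zhang, Lemoine and Mitchell (2018) adds a projection term that is omitted here. The synthetic data, `alpha`, and learning rates are illustrative assumptions.

```python
import numpy as np


def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))


def train_adversarial(X, y, a, alpha=2.0, lr=0.5, adv_lr=0.05, steps=2000, adv_steps=5):
    """Toy adversarial debiasing for a logistic-regression predictor.

    Predictor: p = sigmoid(X @ w), trained to predict the label y.
    Adversary: q = sigmoid(u * z + c), trained to predict the protected
    attribute a from the predictor's logit z = X @ w.
    The predictor descends on task_loss - alpha * adversary_loss, so it is
    rewarded when the adversary fails. alpha=0 gives an unconstrained baseline.
    """
    n, d = X.shape
    w = np.zeros(d)
    u, c = 0.0, 0.0
    for _ in range(steps):
        z = X @ w
        # 1) A few adversary steps on the current logits (plain logistic regression).
        for _ in range(adv_steps):
            q = sigmoid(u * z + c)
            u -= adv_lr * np.mean((q - a) * z)
            c -= adv_lr * np.mean(q - a)
        # 2) One predictor step. grad_adv is d(adversary cross-entropy)/dw;
        #    subtracting it moves w toward logits that hide a from the adversary.
        p = sigmoid(z)
        q = sigmoid(u * z + c)
        grad_task = X.T @ (p - y) / n
        grad_adv = X.T @ ((q - a) * u) / n
        w -= lr * (grad_task - alpha * grad_adv)
    return w


def parity_gap(X, w, a):
    """Absolute difference in mean predicted probability between the two groups."""
    p = sigmoid(X @ w)
    return abs(p[a == 1].mean() - p[a == 0].mean())


# Synthetic data: x1 is a noisy proxy for the protected attribute a,
# x2 is unrelated to a, and the label y depends on both x2 and a.
rng = np.random.default_rng(0)
n = 2000
a = rng.integers(0, 2, n).astype(float)
x1 = a + 0.3 * rng.normal(size=n)
x2 = rng.normal(size=n)
y = (x2 + (a - 0.5) + 0.5 * rng.normal(size=n) > 0).astype(float)
X = np.column_stack([np.ones(n), x1, x2])

w_base = train_adversarial(X, y, a, alpha=0.0)
w_fair = train_adversarial(X, y, a, alpha=2.0)
print(parity_gap(X, w_base, a), parity_gap(X, w_fair, a))
```

With these settings the debiased weights should rely less on the proxy feature `x1` and show a smaller parity gap than the baseline; exact values depend on the seed and hyperparameters.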

Synthetic Data for Balance

When real-world data is lacking for certain groups, Synthetic Data can be used to fill the gaps. By generating high-fidelity images of under-represented demographics, engineers can build a more balanced training set that helps the model perform more evenly across groups. The synthetic images need auditing too, since a generator can carry the same stereotypes described in the previous section.
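One simple way to plan a synthetic top-up is to compute how many generated images each group needs to match the largest group. A sketch under that assumption; parity with the largest group is one possible target, not the only reasonable one, and the group names and counts are hypothetical.

```python
from collections import Counter


def synthetic_quota(group_labels):
    """Number of synthetic images each group needs to match the largest group."""
    counts = Counter(group_labels)
    target = max(counts.values())
    return {group: target - n for group, n in counts.items()}


quota = synthetic_quota(["A"] * 500 + ["B"] * 120 + ["C"] * 40)
print(quota)  # {'A': 0, 'B': 380, 'C': 460}
```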

Human-in-the-Loop and Auditing

Third-party ethical audits and “Red Teaming” (where experts intentionally try to make the AI fail) are becoming increasingly common. Transparency reports and “Data Cards,” which document the origins and limitations of a dataset, are also gaining traction.
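A core auditing step is disaggregated evaluation: reporting accuracy separately for each group instead of a single overall number, which can hide a poorly served group. A minimal sketch with toy labels and hypothetical group names:

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Per-group accuracy plus the gap between the best- and worst-served group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    acc = {g: correct[g] / total[g] for g in total}
    return acc, max(acc.values()) - min(acc.values())


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["g1"] * 4 + ["g2"] * 4
acc, gap = accuracy_by_group(y_true, y_pred, groups)
print(acc, gap)  # {'g1': 1.0, 'g2': 0.5} 0.5
```

Here the overall accuracy is 75%, which looks acceptable until the per-group breakdown shows that one group is served perfectly and the other at chance.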

5. Conclusion: Trust as the Ultimate Metric

The future of AI will not be judged solely by its accuracy on a leaderboard, but by its ability to serve all of humanity without harm. As we integrate Vision AI into the fabric of our society, the “human” element of the data becomes more important than ever.

By acknowledging the biases of the past and implementing rigorous ethical frameworks today, we can help ensure that AI acts not as a distorting mirror, but as a clear window into a more equitable world. The legacy of ImageNet continues, not just as a benchmark for recognition, but as a lesson in the ongoing pursuit of Responsible AI.

FAQ: AI Ethics & Bias

Q: Can AI ever be completely free of bias? A: Total neutrality is difficult because all data is created by humans. However, rigorous auditing and de-biasing techniques can measurably reduce harmful biases, and keeping them low requires ongoing monitoring rather than a one-time fix.

Q: Why was ImageNet criticized for its “Person” category? A: Because many labels were subjective or derogatory (e.g., classifying people by character traits that cannot be seen in a photo), which could lead AI to make judgmental and unfair classifications.

Q: What is “Synthetic Data” in the context of ethics? A: It is artificially generated data used to supplement under-represented groups in a dataset, helping the AI perform accurately for all demographics, not just the majority.

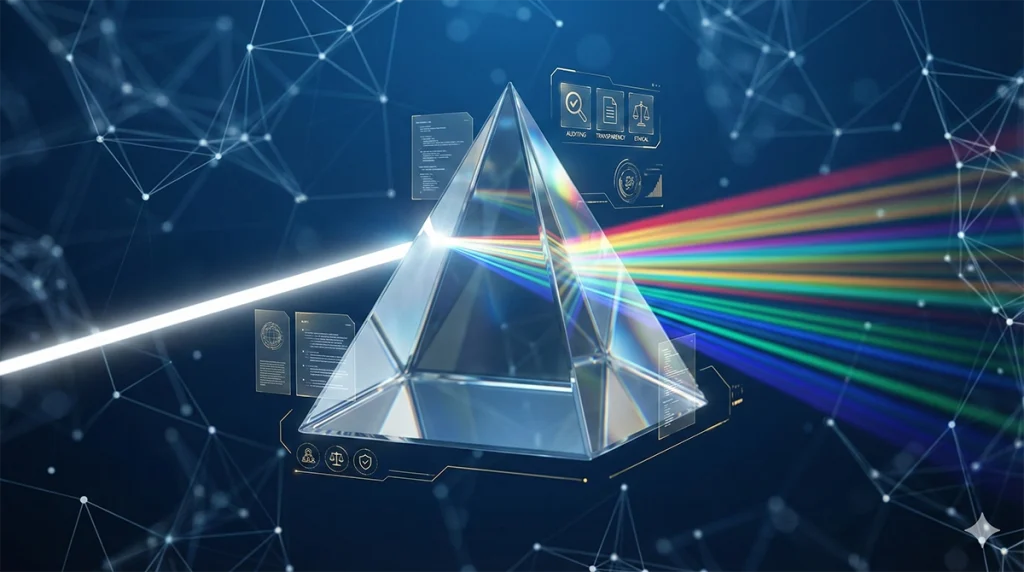

Visual Concept Suggestion: A symbolic image of a glass prism. On one side, a single beam of light (representing raw data) enters, and on the other side, it refracts into a spectrum of diverse, multi-colored light (representing a balanced and fair AI output). The background is a clean, dark blue “tech” environment with glowing white digital nodes connecting the light beams.
