In the early days of deep learning, training a robust computer vision model was a luxury reserved for teams with massive datasets and large compute clusters. Today, a developer can often build a strong classifier with only a few hundred images and a standard laptop. This democratization of AI is made possible largely by a single, powerful concept: Transfer Learning.

Transfer learning is the practice of taking a model trained on a large, general task and repurposing it for a specific, niche task. It is the digital equivalent of a person using what they know about riding a bicycle to learn how to ride a motorcycle: you don’t start from scratch; you adapt what you already know.

1. The Philosophy of Reusable Intelligence

The core premise of transfer learning is that the early layers of a Convolutional Neural Network (CNN) or a Vision Transformer (ViT) learn universal visual features.

Whether a model is looking at a medical X-ray or a self-driving car feed, the low-level building blocks of vision are largely the same. Models pre-trained on ImageNet show this as a hierarchy of layers:

- Early Layers: Detect simple edges, colors, and textures. These are universal across almost all images.

- Middle Layers: Combine edges into shapes, patterns, and parts of objects (e.g., circles, honeycombs).

- Final Layers: Combine these parts into high-level semantic concepts (e.g., “a golden retriever” or “a stop sign”).

By using a model pre-trained on a dataset like ImageNet, we essentially “borrow” a visual cortex that already understands how the world looks, allowing us to focus only on the final, specialized logic.

2. The Mechanics: How Transfer Learning Works

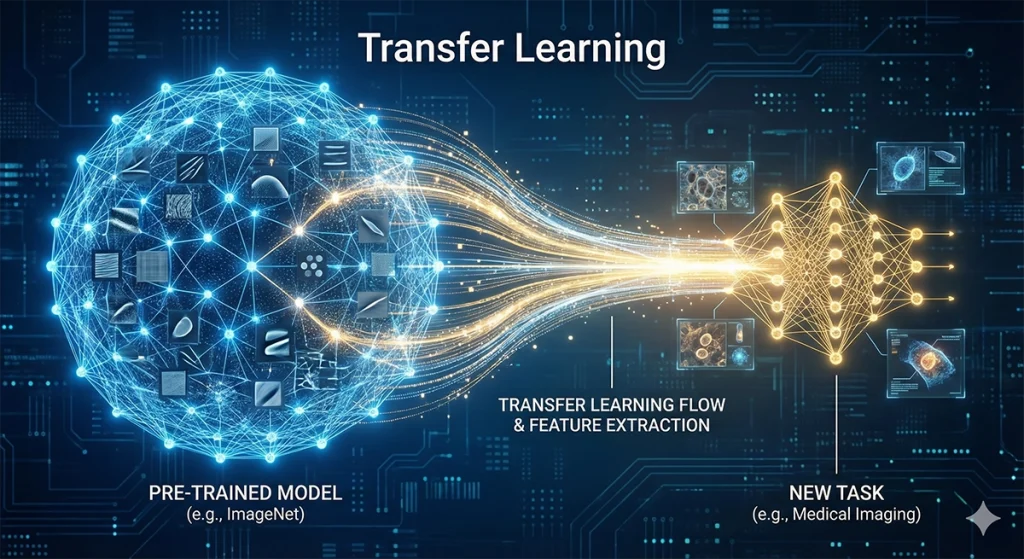

When we apply transfer learning, we typically follow a structured process to transition from a Source Task (general) to a Target Task (specific).

Step A: Selecting a Pre-trained Backbone

Common choices include architectures like ResNet, EfficientNet, or Swin Transformers. These models have already been trained for days or weeks on millions of images, tuning millions of parameters to encode a hierarchy of visual features.

Step B: Freezing vs. Fine-tuning

This is the most critical technical decision in the pipeline:

- Freezing Layers: We “lock” the weights of the early and middle layers so they do not change during training. We train only the new “head” (the final classification layer) of the model. This is ideal when the new dataset is small.

- Fine-tuning: We allow the weights of the pre-trained layers to be updated, using a learning rate much lower than one would use when training from scratch so that the learned features shift only slightly. This allows the model to subtly adjust its “visual cortex” to the specific textures of the new task, such as microscopic cells or satellite terrain.
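The two options above can be sketched in PyTorch as follows. This is a sketch under stated assumptions: the tiny `backbone` is a randomly initialized stand-in for a real pre-trained network, the batch is random data, and the learning rates (and their 100x ratio) are illustrative rather than recommended values.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Stand-in for a pre-trained backbone. Assumption: a real project would load
# trained weights here; this tiny random network only keeps the sketch offline.
backbone = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
)
head = nn.Linear(8, 3)  # new head for three hypothetical classes
model = nn.Sequential(backbone, head)


def set_trainable(module: nn.Module, trainable: bool) -> None:
    """Turn gradient updates on or off for every parameter in `module`."""
    for param in module.parameters():
        param.requires_grad = trainable


# Random stand-in batch: 4 RGB images of 32x32 pixels with class labels.
images = torch.randn(4, 3, 32, 32)
labels = torch.tensor([0, 1, 2, 0])

# Option 1, freezing: only the head is handed to the optimizer.
set_trainable(backbone, False)
head_optimizer = torch.optim.SGD(head.parameters(), lr=1e-2)
weights_before = backbone[0].weight.detach().clone()
loss = nn.functional.cross_entropy(model(images), labels)
loss.backward()
head_optimizer.step()
backbone_unchanged = torch.equal(weights_before, backbone[0].weight)

# Option 2, fine-tuning: everything trains, but the backbone gets a learning
# rate 100x smaller than the head (the ratio is an illustrative assumption).
set_trainable(backbone, True)
finetune_optimizer = torch.optim.SGD(
    [
        {"params": backbone.parameters(), "lr": 1e-4},
        {"params": head.parameters(), "lr": 1e-2},
    ],
    lr=1e-2,
)
```

After the frozen training step, `backbone_unchanged` is `True`, since no gradient reaches the locked weights. If a real backbone contains batch normalization layers, it is also common to call `backbone.eval()` while it is frozen so that their running statistics stay fixed.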

3. Strategy Matrix: When to Use Transfer Learning?

The strategy changes based on two primary variables: the size of your dataset and its similarity to the original source dataset.

| Dataset Scenario | Recommended Strategy |
|---|---|
| Small & Similar (e.g., identifying breeds of cats) | Freeze the backbone; train only the final layer. |
| Small & Different (e.g., specialized medical imagery) | Keep the early feature extractors frozen; retrain the deeper layers and the new head. |
| Large & Similar | Fine-tune the entire network for the highest accuracy. |
| Large & Different | Fine-tune the entire network from the pre-trained weights, which usually still give a better starting point than random initialization. |
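The matrix can also be written as a small lookup. The sketch below takes the two variables as booleans because the article gives no numeric thresholds for "small" or "similar"; the `Strategy` enum and `choose_strategy` function are hypothetical names introduced for illustration.

```python
from enum import Enum


class Strategy(Enum):
    FREEZE_BACKBONE = "freeze the backbone and train only the final layer"
    RETRAIN_DEEPER_LAYERS = "keep the early layers and retrain the deeper layers"
    FINE_TUNE_ALL = "fine-tune the entire network"
    START_FROM_PRETRAINED = "start from pre-trained weights, not random initialization"


def choose_strategy(is_small: bool, is_similar: bool) -> Strategy:
    """Look up the table row for a (dataset size, similarity) pair."""
    table = {
        (True, True): Strategy.FREEZE_BACKBONE,
        (True, False): Strategy.RETRAIN_DEEPER_LAYERS,
        (False, True): Strategy.FINE_TUNE_ALL,
        (False, False): Strategy.START_FROM_PRETRAINED,
    }
    return table[(is_small, is_similar)]


# Example: a few hundred cat photos, close to ImageNet's own content.
print(choose_strategy(is_small=True, is_similar=True).value)
```

Deciding what counts as "small" or "similar" for a given project remains a judgment call that this lookup does not make for you.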

4. The Benefits: Speed, Cost, and Accuracy

Transfer learning is no longer just a convenience; it is a necessity for sustainable AI development.

- Data Efficiency: It can produce strong models even with fewer than 100 labeled images per class, particularly when the target images resemble the pre-training data.

- Computational Savings: Training from scratch on ImageNet can take days or weeks on expensive GPU clusters; fine-tuning a pre-trained model on a small dataset can take minutes to hours on a single consumer-grade GPU.

- Superior Generalization: Pre-trained models often generalize better to real-world “noise” because they have been exposed to the vast diversity of the original training set.
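To make the computational savings concrete, the arithmetic below counts how few parameters are trained when a backbone is frozen. It assumes torchvision's ResNet-50 as the example backbone (about 25.6 million parameters, with a 2048-dimensional feature vector feeding the head) and a hypothetical 10-class target task; neither choice comes from the article.

```python
# Parameter counts for torchvision's ResNet-50 (assumed example backbone).
RESNET50_TOTAL_PARAMS = 25_557_032  # includes the 1000-class ImageNet head
FEATURE_DIM = 2048                  # size of the vector fed into the head
NUM_TARGET_CLASSES = 10             # hypothetical target task


def linear_params(in_features: int, out_features: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features


imagenet_head = linear_params(FEATURE_DIM, 1000)
backbone_params = RESNET50_TOTAL_PARAMS - imagenet_head
new_head = linear_params(FEATURE_DIM, NUM_TARGET_CLASSES)

# Share of the adapted model that is trained when the backbone is frozen.
trainable_fraction = new_head / (backbone_params + new_head)
print(f"backbone: {backbone_params:,} frozen parameters")
print(f"new head: {new_head:,} trainable parameters")
print(f"trainable share: {trainable_fraction:.2%}")  # roughly 0.09%
```

The forward pass still runs through the whole network, so the savings come mainly from skipping gradient computation and optimizer state for the frozen layers, and from needing far fewer training steps.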

5. The Modern Era: Foundation Models and CLIP

As we move toward 2026, transfer learning has evolved into the era of Foundation Models. Models like CLIP (Contrastive Language-Image Pre-training) are trained on hundreds of millions to billions of image-text pairs collected from across the internet.

Instead of just transferring “shapes,” these models transfer semantic understanding. This enables “Zero-shot Learning,” where a model can classify images into categories it was never explicitly trained to label by comparing each image against a text description of every candidate category.
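The matching step behind zero-shot classification can be sketched without any model at all. In the snippet below, the three-dimensional vectors are made-up toy embeddings, not real CLIP outputs, and `scale` is an assumed sharpening factor; a real system would obtain the embeddings from the model's image and text encoders.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(scores: list[float]) -> list[float]:
    """Turn scores into probabilities, subtracting the max for stability."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_classify(
    image_embedding: list[float],
    text_embeddings: dict[str, list[float]],
    scale: float = 100.0,  # assumed value; sharpens the distribution
) -> dict[str, float]:
    """Score an image against every text prompt; no task-specific training."""
    prompts = list(text_embeddings)
    sims = [cosine_similarity(image_embedding, text_embeddings[p]) for p in prompts]
    probs = softmax([scale * s for s in sims])
    return dict(zip(prompts, probs))


# Toy embeddings (hypothetical values chosen for illustration).
image = [0.9, 0.1, 0.2]
prompts = {
    "a photo of a dog": [1.0, 0.0, 0.1],
    "a photo of a cat": [0.1, 1.0, 0.0],
    "a photo of a car": [0.0, 0.2, 1.0],
}
probabilities = zero_shot_classify(image, prompts)
best_label = max(probabilities, key=probabilities.get)
print(best_label)  # a photo of a dog
```

Adding a new category only requires adding a new text prompt, which is why no retraining is needed to extend the label set.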

Conclusion: The Bridge to Specificity

Transfer learning represents the bridge between the “General Intelligence” of massive datasets and the “Specialized Intelligence” required for real-world applications. By standing on the shoulders of giants (the researchers and datasets that came before), modern AI developers can focus on solving human problems rather than reinventing the visual wheel.

In the story of imagin.net, transfer learning is the key that unlocked the potential of ImageNet for every industry, from healthcare to environmental conservation.
